ETHICS, GROWTH AND CHANGING ATTITUDES - WILL ARTIFICIAL INTELLIGENCE TAKE OVER THE WORLD?

By Mia Borgese

I attended a talk on AI at Brunel University given by Amir Khan, Dr Tatiana Kalganova, Professor Joseph Giacomin and Professor Will Self. It introduced me to many of the ideas in this article and led me to think about a great deal more.

I want to consider whether AI can, and ever will, take over the world.

Firstly, it is important to consider whether AI will become widely used enough to take over the world. In recent years, the world has seen extreme growth in artificial intelligence, for three main reasons:

1. There are many good data models available today (mathematically developed algorithms).

2. There is a huge amount of data available to us, and it is growing exponentially.

3. Processing power is incredible, and cloud computing is a growing industry.

Put these three ingredients together and you get artificial intelligence as we know it today.

Next, we must consider attitudes: how willing are people today to accept AI into their homes, and does that willingness make us, as a global population, susceptible to an AI take-over? Attitudes to AI have changed dramatically over the last twenty or so years, both in how comfortable we are with it and in how we perceive it. AI has only recently been accepted into society; today, 70% of people are happy to have AI in their lives. Our idea of design has shifted in a similar way. In the 20th century, what would come to mind on hearing the word 'design'? Aesthetics: fashion, shoes, furniture. Today it would also mean technology: efficiency, products and how best to optimise them, generally much more complex ideas than just 20 years ago. (It is difficult to say whether the growth of AI created these positive attitudes or vice versa; I guess it's a chicken-and-egg scenario.) This possibly naive openness means people could be more vulnerable to a robot take-over.

We will now consider how AI's abilities compare with those of humans. AI offers creative ways of looking at the world. Many people say that AI can never take over the world because humans are more creative. However, I disagree. Before reading on, take a look at these four paintings. Two were created by AI (which, like all AI, was programmed with algorithms) and two were made by humans. Can you tell which are which?

It was, in fact, the top two that were created by AI. They look pretty human-like, right? The fact that you probably could not say with conviction which were made by humans and which by AI is worrying. AI can now produce things as humans do, mimicking the creativity and individuality of our species. This allows humans to unlock creativity beyond the imagination. Another good example is Morgan Studios, which uses AI both to inspire AND to produce its furniture: take a look at this rather modern-looking chair and table set.

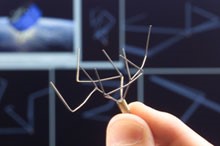

But how useful is AI really in our world? It has allowed us to enhance our knowledge. For example, a team at NASA took inspiration from nature to develop algorithms and build them into an AI, which then designed antennas that we could never have arrived at from our own theories. AI is used in many ways like this to deepen our understanding of the world.

AI is used in nearly every area of our lives nowadays. When we hear about volcanic eruptions on the news, AI is at work: it can learn from data collected from different sources, such as GPS signals, to predict where volcanic ash will have the worst effect. When we order a product online, are shown a delivery window and the parcel arrives within that exact time frame, that is AI too: large delivery operations, such as Amazon's, use it for optimisation so that deliveries arrive when stated and at a lower price.

Humans nowadays have a choice. They can use a non-thinking tool, one that is simple and has a single purpose, such as a spanner. Or they can choose a 'sentient assistant', as Professor Joseph Giacomin put it: one that can do some things that humans cannot, and can think as we do. These objects can make decisions and, unlike non-thinking tools, have more than one way of operating. As a result, software algorithms are making clever decisions all the time without our being aware of it. Even a toaster can be carrying out many logical operations in the background that we do not notice.

In fact, many people have become so reliant on and involved with these objects, which seem so intelligent, that they begin to treat them as they would a human. For example, some people greet their Amazon Alexa when they get home from work.

However, things could get out of hand. How do we know these sentient assistants are transparent? That they are really doing what they say they are doing? How can we ensure that they are not making mistakes? Self-driving cars can learn from humans, but what if they pick up a bad habit? Is it therefore wrong to rely on them so much? (Some people are pushing for a scenario in which humans are unable to make life-or-death decisions, for example in legislation on nuclear weapons.)

To address these issues, there is an ethical guide, entitled 'Guide to Ethical Design of Robots', that can be applied when designing AI; it outlines problems that can occur. Here are some of the dangers it lists:

o Loss of trust – This means that the robot should not communicate in a way that seems untrustworthy.

o Privacy and confidentiality – Sometimes these devices record data and send it back to their company by mistake. This happened with Siri, when customers' data was sent to Apple. Think of Amazon Alexa: for her to hear when her name is called, isn't she listening to what you are saying all the time? The Siri case was not malicious but a mistake; however, it raises the question of who owns this data. Because of such issues, it is now said that "PRIVACY is a LUXURY", because we don't own our data.

o Lack of respect for cultural diversity and pluralism – AI should be designed so that, when interacted with, it respects every user's lifestyle and background, including religion, culture, clothing, eating habits, etc. It should not offend anyone.

o Robot addiction – Reliance on robots should not go so far that people no longer want to carry out tasks themselves.

o Dehumanisation of humans in the relationship with robots – People do not want to feel that robots are cleverer than them. For example, it wouldn't feel great if the cleverest member of a team were the robot.

o Self-learning system exceeding its remit – Many forms of AI are self-learning. What if self-driving cars learn to drive better than us, or differently from us? Robots can sometimes be too smart and learn things that their creators never intended. For example, a self-learning car could pick up bad human habits, such as speeding or cutting off pedestrians at crossings, or even the worst of human behaviour, such as racism.

o Environmental awareness – A robot may be following its algorithms and carrying out its job brilliantly, yet not realise that it is causing harm to people, the environment, etc.

As Giacomin said, the devil is in the detail, and that is clearly the case here.

So, will AI take over the world?

There is an idea, explored in Mary Shelley's Frankenstein, that since the Industrial Revolution we have been able to do something similar to God's creation of life. But what if, once we release our creations into the world, something unpredictable happens? What if our creations turn against us with malicious intent? I cannot say in general how to avoid this, because the right precautions are specific to each thing being made and its purpose. However, here are some arguments for why a robot take-over can never happen.

Professor Will Self believes that technology is driven not by human intention but by the primary needs of the phenotype, the individual organism as shaped by its genes; we use tools to further our phenotypic role of survival. On this view, technology is beyond good and evil, and the 'taking over the world' question is muddled by our ideas of consciousness, which we treat as synonymous with our individuality. Yet consciousness is oddly collective: we have evolved to be that way, and consciousness developed in our phenotypes for instinctual survival.

Another way of addressing this issue is to consider how alike robots are to humans. Some people believe a take-over can never happen because of the Cartesian idea that the mind is partly made of something non-material; AI can therefore never have a mind, and so can never take over the world. This links to the idea that robots can only have a soul if they are fully integrated into the human species, for example if they can reproduce and be part of natural selection. They cannot, so they do not have a soul. If we ever succeed in giving AI a meaningful consciousness, it will become capricious and irrational, and will fall in love, just like humans. But robots can't really be like us because of the way we are embodied.

So, to conclude with my own opinion:

Can machines think? And what does this say about the human mind? And if we can make robots think, should we? I think language is a fickle thing: what do we mean by 'think'? I believe machines can think, but only within the confines of what they have been taught and programmed to do. I think that robots can copy what many people believe they can't: the individuality and creativity of humans. But I think a robot take-over can never occur, because robots cannot have a meaningful consciousness: they lack human emotion, there is a limit to their emotional range, and, because of how we and they are embodied, they are not integrated into the human species and cannot biologically do all the things we can. And, rather controversially, I do believe that in the future science and technology will develop enough for us to give robots emotions; after all, emotions come down to physical processes such as hormones and electrical impulses. BUT, just as human cloning may be possible but has not been pursued because it could be unethical, I believe people wouldn't give robots emotions, because it would be unethical and perhaps made illegal: authorities would try to avoid the consequences of robots having emotions, such as malicious intent and, in turn, a take-over. Perhaps people will do it illegally once it is possible, but it will never be done on a large enough scale for a take-over.

Because of this, I believe that a robot take-over may be possible but, fortunately (or unfortunately, for some), it will never happen. Even so, I don't believe it is an entirely far-fetched idea from some science fiction novel…