ARTIFICIAL INTELLIGENCE - A HISTORY

By Vedant Nair

People are lazy. The literal purpose of technology is to account for this in our lives. Philosophers argue about what the point of life is, but in the meantime, scientists focus on how to make our lives easier.

The first seeds of artificial intelligence were planted in the 1940s, with the invention of the programmable digital computer. Since then, true artificial intelligence has been something of a dream. If machines could do humankind’s thinking for it, the result would be absolute freedom: no need for people to work, no need for them to exert themselves, a world without mental labour, worries or stress.

Then what’s stopping us? It’s not as easy as creating a program, or sitting down with a brain and your laptop and spending a few hours on it before work. Research suggests that the brain has roughly one hundred trillion (a one followed by fourteen zeroes) neural connections, far more than the number of stars in our galaxy and far too many variables to program by hand. Such an immense scale meant that early researchers’ projects, like Grey Walter’s tortoises and the Johns Hopkins Beast, could never come close to replicating it, because they relied on analogue circuitry.

They used physical circuitry and moving parts in an attempt to replicate the brain’s activity. The processing power and data capacity required were far beyond anything remotely possible at the time: a machine built that way with as many connections as the brain would have had to be bigger than the Earth, with wires thousands of kilometres long.

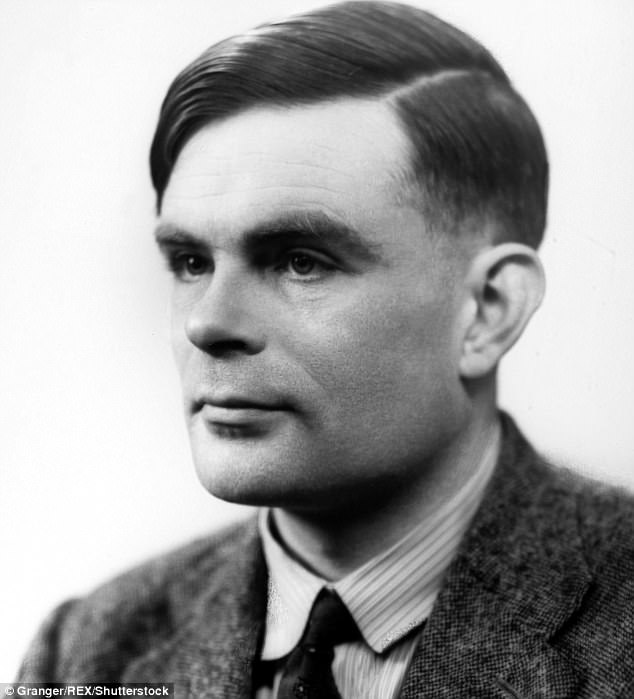

Alan Turing

One of the first breakthroughs came during World War Two, when German forces encrypted their communications with a machine known as Enigma. It substituted letters for other letters according to settings that changed daily. It was a cryptographic marvel: the Allies could work out how the cipher operated, but struggled to break each day’s settings within the twenty-four-hour limit. Alan Turing was a revolutionary computer scientist who had two key impacts, among many, on the world of artificial intelligence.

First, he helped break Enigma. The key was a pattern in the German communications: every morning they sent a weather report, so every morning the Allies’ codebreakers could guess some of the words an intercepted message contained. Turing understood that knowing any one part of an encrypted message would allow him to work out that day’s settings and decrypt the rest.
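The idea of using a known fragment to unlock a whole message can be sketched in a few lines of Python. This is only a toy: it assumes a simple letter-shift cipher rather than Enigma, and the message, the key and the function names `shift_text` and `recover_key` are all invented for illustration.

```python
import string

ALPHABET = string.ascii_uppercase

def shift_text(text, key):
    """Shift every letter forward by `key` places; leave other characters alone."""
    shifted = []
    for ch in text:
        if ch in ALPHABET:
            shifted.append(ALPHABET[(ALPHABET.index(ch) + key) % 26])
        else:
            shifted.append(ch)
    return "".join(shifted)

def recover_key(ciphertext, known_word):
    """Try every possible key and return the one whose decryption contains the known word."""
    for key in range(26):
        if known_word in shift_text(ciphertext, -key):
            return key
    return None

# Hypothetical daily key and message (assumptions, not historical data).
secret_key = 7
intercepted = shift_text("WETTER HEUTE KLAR. ANGRIFF UM MITTERNACHT.", secret_key)

# "WETTER" is German for "weather", the word the codebreaker expects to find.
key = recover_key(intercepted, "WETTER")
print(key)
print(shift_text(intercepted, -key))
```

Once the expected word gives away the key, the rest of the message, weather report or not, falls out with it.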

He designed a machine to do this: the Bombe, often counted among the earliest steps towards artificial intelligence and a practical counterpart to the abstract “Turing machine” he had described in 1936. The machine tested possible settings against the expected text of the weather report until it found the ones that fitted, enabling any communications to be decrypted within the day, until the settings changed again. Secondly, after the war, he proposed the Turing test.

It’s a test that is still referred to today to assess a form of artificial intelligence: a machine passes if a human tester, conversing with it, cannot tell that it is in fact a machine. This is useful because it provides a standard against which scientists can compare different artificial intelligences.

The development of algorithmic machines like Turing’s brought forward the key problem mentioned earlier: if such a machine was to be used in applications beyond mathematics, it would need to be much, much bigger. What made this problem manageable was the change from analogue machinery to digital machinery, which brought three key advantages: noise resistance, precision and design.

Firstly, analogue circuitry is far more susceptible to noise than digital circuitry, because an analogue signal can take any value in a continuous range, whereas a digital signal only has a binary set-up: zeroes and ones. This means that the only thing that can corrupt a digital signal is noise strong enough to push it across the halfway point between the two levels (e.g. past 0.5 when the levels are 0 and 1). Secondly, the precision of a digital circuit is far superior to that of analogue circuitry.
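The noise argument can be illustrated with a small Python sketch. The signal values, the noise samples and the function names `add_noise` and `read_digital` are assumptions made up for this example, not measurements from a real circuit.

```python
def add_noise(values, noise):
    """Add one noise sample to each transmitted value."""
    return [v + n for v, n in zip(values, noise)]

def read_digital(received, threshold=0.5):
    """Decide each bit by which side of the halfway point the received level falls on."""
    return [1 if level >= threshold else 0 for level in received]

# Hypothetical values, chosen for illustration.
bits = [1, 0, 1, 1, 0]                      # a digital signal
analogue = [0.80, 0.15, 0.62, 0.97, 0.33]   # an analogue signal
noise = [0.20, -0.10, 0.30, -0.25, 0.05]    # every sample is smaller than 0.5 in size

recovered_bits = read_digital(add_noise(bits, noise))
received_analogue = add_noise(analogue, noise)

print(recovered_bits == bits)         # the digital message survives intact
print(received_analogue == analogue)  # the analogue values have all drifted
```

As long as the noise stays below half the gap between the two levels, the digital reader recovers every bit exactly, while the analogue signal has no way to tell noise from information.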

A digital signal can carry a number to as many digits as its designers choose, so a frequency can be recorded as 1.5555589 Hz rather than just 1.55556 Hz. Finally, the designs of digital circuits can be simpler than analogue ones, and are therefore easier to build. Because of this, since their introduction they have become far more common than analogue circuits. This has affected artificial intelligence because a far bigger AI program can now exist on a pen drive than once could on a machine the size of a room.

In addition to this, a key stage in the development of artificial intelligence was reached. Because it is relatively simple to program a digital computer to record its previous steps, AI could now learn. This means that instead of a programmer inputting thousands of commands, the program could interact with data representing different situations and adjust itself. Through this form of programming, vast amounts of time could be saved and AI would be closer to the actual landmark of intelligence: learning.
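To make the idea of learning from recorded steps concrete, here is a minimal Python sketch. It is not how any particular historical system worked: the class `ExperienceLearner`, the three moves and their payoffs are all hypothetical, chosen only to show a program improving its choices from its own record.

```python
class ExperienceLearner:
    """Keeps a record of past results and uses it to choose the next action."""

    def __init__(self, actions):
        self.history = {action: [] for action in actions}

    def choose(self):
        # Try every action once, then prefer the best average result so far.
        for action, results in self.history.items():
            if not results:
                return action
        return max(self.history,
                   key=lambda a: sum(self.history[a]) / len(self.history[a]))

    def record(self, action, reward):
        self.history[action].append(reward)

# Hypothetical situation: three moves with fixed payoffs the program is never told.
payoffs = {"left": 1, "forward": 5, "right": 2}

learner = ExperienceLearner(payoffs)
for _ in range(10):
    action = learner.choose()
    learner.record(action, payoffs[action])

print(learner.choose())
```

Nobody tells the program which move is best. It tries each one, records what happened, and from then on its own history does the work a programmer’s commands would otherwise have done.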

It is important to understand artificial intelligence’s history, but it is vital to understand its current and future impacts. AI has revolutionised computing and programming as we know them, and it affects our daily lives, from basic apps to medical wonders.